First steps in R

Bioinformatics and Biostatistics Hub, Computational Biology Department

1 Get ready for the course

Before the course starts, you should have installed R and RStudio on your computer (see below). RStudio is highly recommended, especially if you are a beginner.

1.1 About R

This course will use a programming language oriented towards data analysis and statistics: R.

It can be useful for:

- Publication ready graphs

- Biological analysis libraries

- Statistical testing

- Many more: table reformatting, maps, interactive visualizations, etc…

R can be used in an interactive console: typing and executing commands one by one.

Another way to work with R is to prepare a list of commands in a text file (a script, which is a kind of program) and then tell R to execute them. This is an excellent way to have reproducible and well documented analyses.

1.2 About RStudio

RStudio is an application in which you can load data, execute R commands, prepare scripts, get information on commands and environments (the current state of your work), visualize graphics and more.

You do not need RStudio to work with R, but it will make your life easier during this course.

1.3 Installing R

R can be obtained from the following site: https://cran.rstudio.com/

Try to install version 3.5 or above (and preferably at least 3.6). If your operating system provides R already, but in a slightly earlier version, most things in the present course will probably work fine, but this might not be true for later R-based courses, and you will run into trouble sooner or later.

For those using a Debian or Ubuntu based Linux, we suggest reading the following material before proceeding to install R: https://github.com/blaiseli/R_install_notes/blob/master/Install_Linux.md. Some of the content may also be helpful for users of other types of Linux distributions.

Windows users should refer to the following page: https://cran.rstudio.com/bin/windows/base/

Mac OSX users should refer to this one: https://cran.rstudio.com/bin/macosx/

1.4 Installing RStudio

You can obtain RStudio from this page: https://www.rstudio.com/products/rstudio/download/

Choose the free version (“RStudio Desktop, Open Source Licence”).

Download and run the “installer” suitable for your operating system.

1.5 Course material

- The most up-to-date version of the present course support should be available at http://hub-courses.pages.pasteur.fr/R_pasteur_phd/First_steps_RStudio.html

- If you spot typos, errors, ambiguities, etc., you can sign in on our local gitlab server using your Pasteur ID (if you are a member of the Institut Pasteur) or using a github account to submit an issue here: https://gitlab.pasteur.fr/hub-courses/R_pasteur_phd/issues

- You can get the full course material (html page, data for the exercises, and more) in the following zipped archive (recommended): http://hub-courses.pages.pasteur.fr/R_pasteur_phd/2020-03/First_steps_RStudio.zip

The course contains small exercises (indicated with  ) to help you memorize the techniques we present. Try to search by yourself, then discuss with your neighbours (if you want), before clicking on the “Hint” buttons. There are often several ways to solve a problem when programming. If you come up with solutions other than the ones we propose, while still using techniques already seen in the course, don’t hesitate to share them with us.

) to help you memorize the techniques we present. Try to search by yourself, then discuss with your neighbours (if you want), before clicking on the “Hint” buttons. There are often several ways to solve a problem when programming. If you come up with solutions other than the ones we propose, while still using techniques already seen in the course, don’t hesitate to share them with us.

The buttons with the  icon open sections that will not be treated during the course. Feel free to study them when you have free time (if the course pace is too slow for you, for instance).

icon open sections that will not be treated during the course. Feel free to study them when you have free time (if the course pace is too slow for you, for instance).

Here’s one already!

The material for the other modules of the course is (or will soon be) available at https://c3bi.pasteur.fr/bioinformatics-program-for-phd-students-2019-2020/.

2 Smooth introduction to R

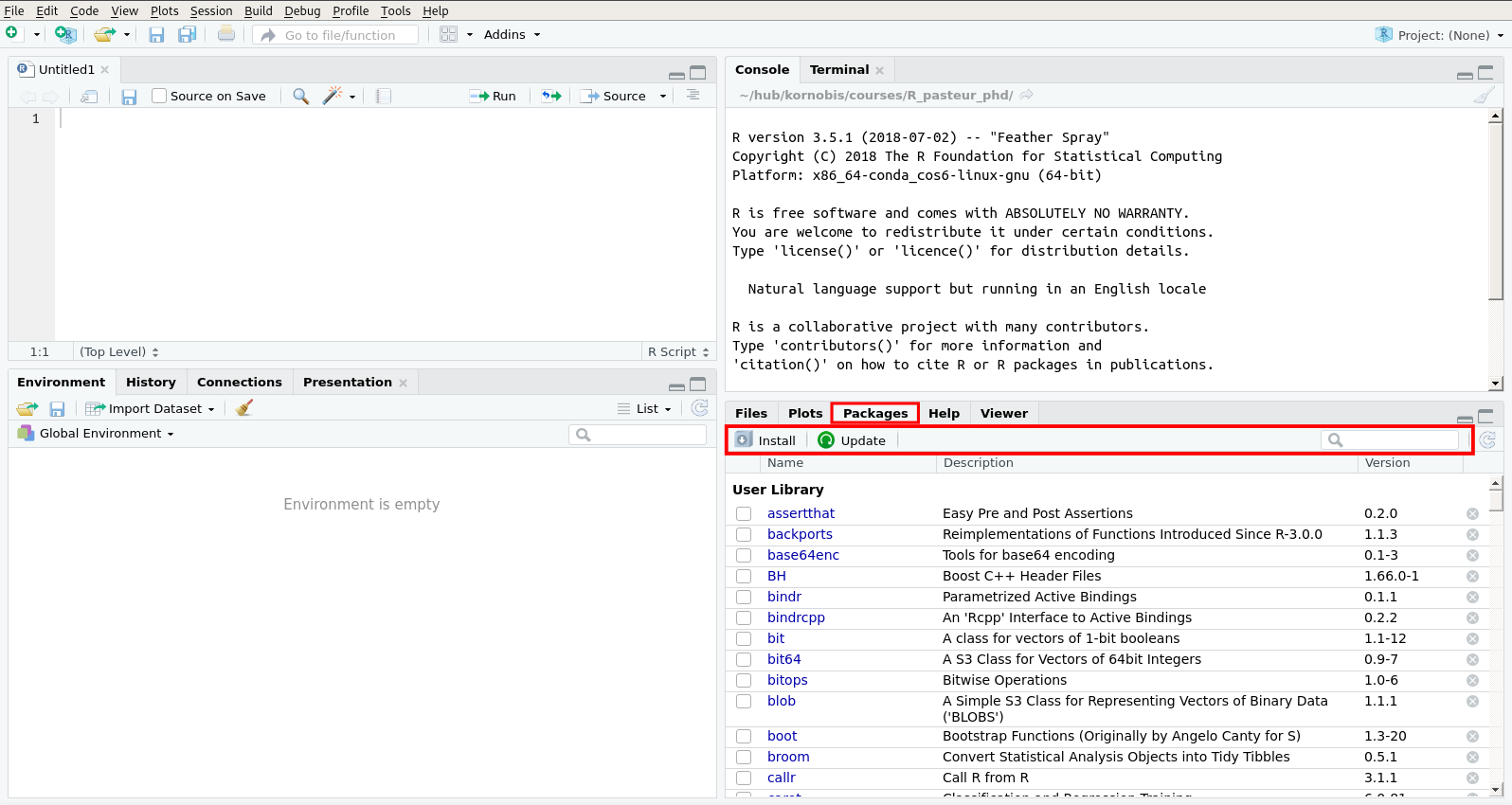

2.1 The RStudio interface

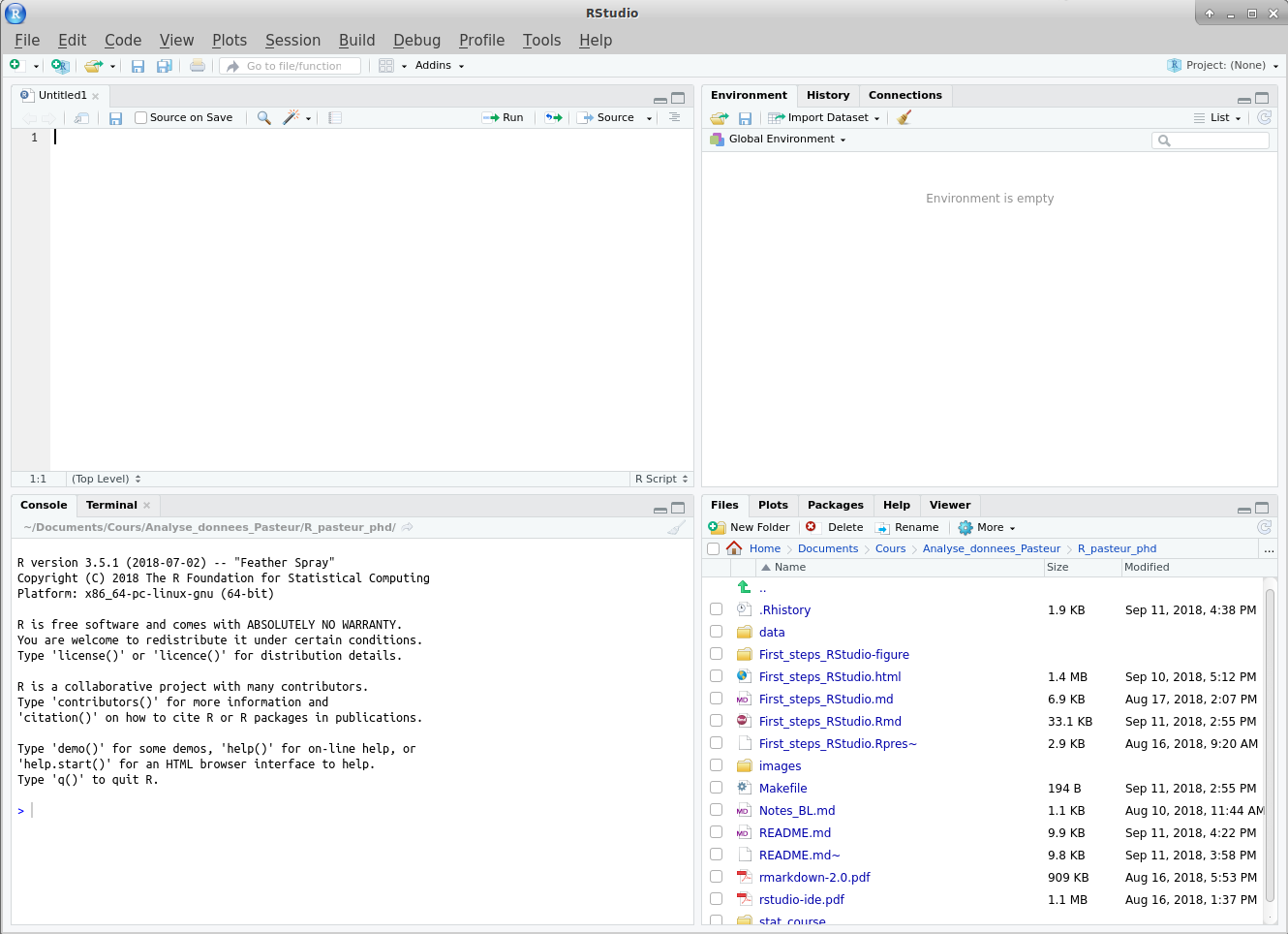

By default, RStudio opens with three or four panes, each containing several tabs:

- The top-left pane (if present, otherwise click

) is the editor, where you can write scripts, and other types of documents. This is also where data frames can be visualized.

) is the editor, where you can write scripts, and other types of documents. This is also where data frames can be visualized. - The bottom-left one contains the “Console”, where you can directly type R commands.

- The top-right one contains information about the “Environment”: values currently defined in your R session.

- The bottom-right one contains various tabs, including the following ones:

- a file explorer

- a help viewer

- a plot visualizer

- a package manager (packages are a way to extend the functionalities of R).

2.2 Loading data in RStudio

A frequent use of R is to analyse data stored as tables in files.

2.2.1 Quick dive in Milieu Intérieur dataset

The “Milieu Intérieur” project is an ambitious population-based study coordinated by the Institut Pasteur, Paris. The objective is to dissect the interplay between genetics and environment and their impact on the immune system (https://research.pasteur.fr/en/program_project/milieu-interieur-labex/).

Download the Milieu Intérieur dataset from http://hub-courses.pages.pasteur.fr/R_pasteur_phd/data/mi.csv, and remember where in your computer it was saved.

The “Environment” tab of the top-right pane of RStudio contains an “Import Dataset” button ( ) that can be used to load various kind of files.

) that can be used to load various kind of files.

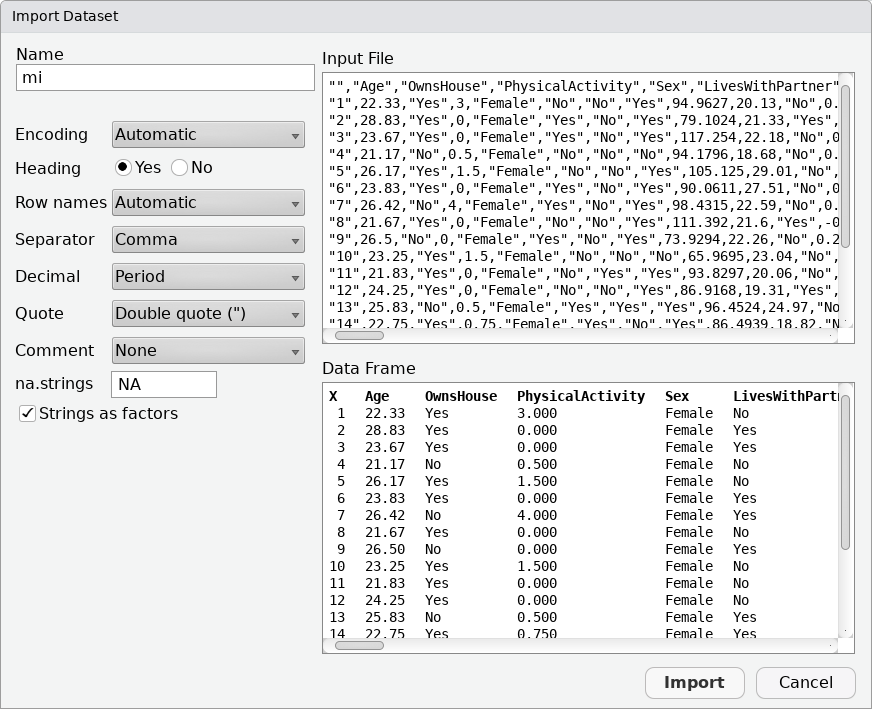

Open the “Import Dataset” menu and choose “From Text (base)…”.

A file browser will open. Select the file you just downloaded.

You should see a window presenting you options related to how the content of the file should be interpreted, similar to the following:

Most default values should be OK. Be sure that the “Heading” option is set to “Yes” and select “Use first column” for the “Row names” option, then click on the “Import” button.

A tab should open in the top-left pane displaying the content of the file. These data represent information pertaining to individuals that participated to the “Milieu Intérieur” study. Lines correspond to individuals (“observations”), and columns to various pieces of information obtained for them (“variables”).

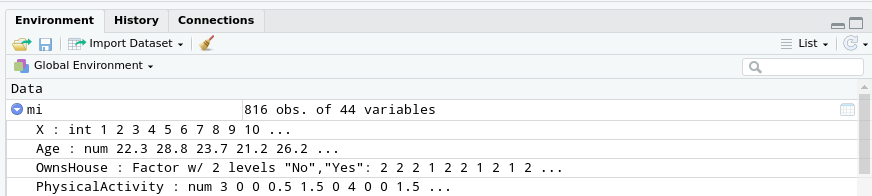

In the “Environment” tab of the top-right pane of RStudio, you have access to more information about this table:

- next to the name of the table (

mi, if you used the default choice at import), the number of observations and variables in the table; - below (if you click on the arrow next to the name of the table), a listing describing how the data for each column is represented in R.

You can see that the information consists in numerical and textual information.

What we obtained by loading this file is called a “data frame” in R. A lot of things can be done using data frames, and we’ll learn some of it later in this course.

Briefly, and to wet your appetite, a specific column of a data frame can be accessed by using the name of the data frame (here mi), a dollar sign $ and the name of the desired column (here for example Age):

mi$Age [1] 22.33 28.83 23.67 21.17 26.17 23.83 26.42 21.67 26.50 23.25

[ reached getOption("max.print") -- omitted 806 entries ]You want to visualize the age distribution of this dataset ? To plot a histogram, just use the hist function:

hist(mi$Age)A function is a predefined set of computations that can be re-executed, possibly with variations, depending on its arguments (the things given between the parentheses). Pre-defined functions, like hist help make R very useful for non-programmers.

But we are getting ahead of ourselves and we will see more on functions, data frames manipulation and data visualization later on\(\dots\) Before being able to actually analyse data, we will have to learn about the various ways used to represent data in R, and what kind of things can be done with data on a basic level.

2.3 R as a calculator

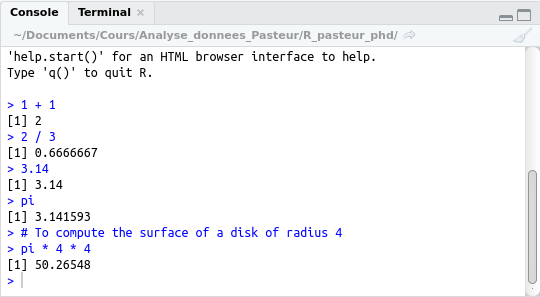

In the “Console” part of RStudio, you can see a prompt: This > symbol invites you to type commands.

Try the following (validate using the “return” key after typing):

> 1 + 1[1] 2It seems R gave the correct result (ignore the [1] for now).

Note that some complicated calculations may take a non-negligible time. A  button should be visible until the calculation ends. If you suspect something is going wrong, use it to interrupt the calculation.

button should be visible until the calculation ends. If you suspect something is going wrong, use it to interrupt the calculation.

Once R is ready to take new commands, you should see a prompt (>) again in the console.

You can of course also subtract (-), multiply (*) and divide (/). Feel free to experiment with the usual mathematical operators.

2.4 Numbers

Divisions may give answers with a dot as decimal separator:

> 2 / 3[1] 0.6666667 The result can be rounded for the display. The actual value may also sometimes not be mathematically exact. This is due to limitations inherent to the way numbers are encoded by the computer.

The result can be rounded for the display. The actual value may also sometimes not be mathematically exact. This is due to limitations inherent to the way numbers are encoded by the computer.

Use a dot, not a comma when writing numbers with decimals (called double in R):

> 3.14[1] 3.14By the way, R knows about \(\pi\):

> pi[1] 3.141593Scientific notation is allowed, and could be useful:

> 5e-3[1] 0.0052.5 Variables

2.5.1 Defining and using variables

Like the predefined pi, you can temporarily “save” values in the computer memory by associating them to a name:

> my_variable <- 2 + 2Some people tend to use the equal sign (=) instead of the above left-pointing arrow (<-). Both work in R.

You can then use the corresponding value by simply typing the associated name:

> my_variable[1] 4> my_variable * 5[1] 20This is called a variable: the associated value can be updated by later commands:

> my_variable <- 5

> my_variable[1] 5> my_variable <- 3

> my_variable[1] 3> my_variable <- 2 * 3

> my_variable[1] 6 By the way, you can re-use the previous commands in the console by navigating the “command history” using the up and down arrows of your keyboard. You can then modify the selected command if needed (use the left and right arrows to get to the part you want to edit).

By the way, you can re-use the previous commands in the console by navigating the “command history” using the up and down arrows of your keyboard. You can then modify the selected command if needed (use the left and right arrows to get to the part you want to edit).

Note that the older values are now lost (variables are also lost when you quit or restart R).

You will use variables a lot. You can use them to store many different types of values that we will see later. For instance, the “Milieu Intérieur” data frame we previously loaded from a file is stored using a variable named mi.

Note that this notion of “variable” (in the “computer programming” sense) is not exactly the same as the one we used when referring to columns in the data frame, which is more a notion belonging to the data science field.

Note that this notion of “variable” (in the “computer programming” sense) is not exactly the same as the one we used when referring to columns in the data frame, which is more a notion belonging to the data science field.

You can even store functions (sequences of commands that can be re-used).

You can use a variable as many times as you wish in a calculation, and you can even use it to create another variable:

> radius <- 4

> area <- pi * radius * radius

> area[1] 50.26548 Another handy feature provided by RStudio is code completion. You will notice it after typing three characters: a pop-up will appear giving you the variables and functions starting with these characters and available in the current environment. This pop-up is giving you a brief description as well. You can make the code completion pop-up also appear by pressing the

Another handy feature provided by RStudio is code completion. You will notice it after typing three characters: a pop-up will appear giving you the variables and functions starting with these characters and available in the current environment. This pop-up is giving you a brief description as well. You can make the code completion pop-up also appear by pressing the TAB key (the one with a kind of arrow, located at the left of the first row of letters of most computer keyboards).

You can also see the variables you defined and their current value in the “Environment” tab (top-right pane).

2.5.2 Variable naming rules

Variable names need to conform to certain rules. You should be relatively safe if you stick to the following:

- Use only:

- plain ASCII letters (“A” to “Z” and “a” to “z”);

- numbers;

- underscore ("_").

- Don’t start a variable name with underscore or a number.

- Avoid single letter unless meaning is obvious.

- Avoid using names that already exist (that’s the tricky part).

This is OK:

> five_point_6 <- 5.6This is not (try it, you will get an error):

> 6_times_4 <- 24## Error: <text>:1:2: unexpected input

1: 6_

^You can do this, but you shouldn’t:

> pi <- 3.14If you did it nonetheless (you fool…) the older and more accurate value of pi is still available as base::pi.

The names of your variables should help you and others understand what the code is supposed to do.

The names of your variables should help you and others understand what the code is supposed to do.

You will sometimes see dots (.) in variable names. This is allowed in R, but in many programming languages, dots have special meanings. You’d better avoid taking the habit of using dots in variable names. This will make the transition less painful if you ever decide to learn other languages.

2.6 Intermission: Scripts in RStudio

2.6.1 Getting organized

Above the console, there is a space where you can take note of the commands you want to remember, and save this to a (plain text) file.

This is actually intended to create a script. A script is a file containing a succession of commands that can be executed to do all the corresponding computations. Just put a # before the parts that are not valid R commands.

This is called “commenting”, and it is a good practice to put such comments in your scripts, especially in parts that took you time to get right. That helps you remember what your intentions were when you wrote the commands (and can also help others, if you share your scripts).

This is called “commenting”, and it is a good practice to put such comments in your scripts, especially in parts that took you time to get right. That helps you remember what your intentions were when you wrote the commands (and can also help others, if you share your scripts).

(You can use the  button to create a new script or in the menu “File” > “New File” > “R Script”.)

button to create a new script or in the menu “File” > “New File” > “R Script”.)

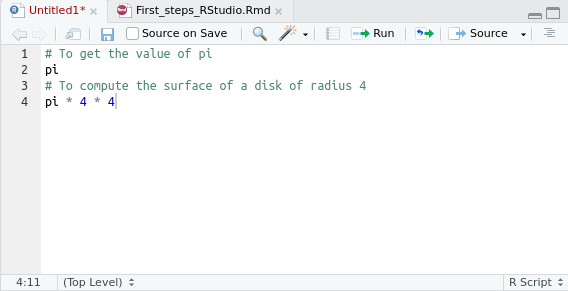

Small script example:

2.6.2 Executing saved scripts

Execute code on the current line (or block of selected lines) in the console below (shortcut: (Ctrl or Cmd) + Enter).

Execute code on the current line (or block of selected lines) in the console below (shortcut: (Ctrl or Cmd) + Enter). Execute the whole script in the console below (shortcut: (Ctrl or Cmd) + Shift + Enter).

Execute the whole script in the console below (shortcut: (Ctrl or Cmd) + Shift + Enter).

(You can also execute an R script outside RStudio, using the Rscript command from a command line interface.)

Example of execution of the current line:

(The preceding comments have also been “executed”: they appear in the console, but have no other effects.)

2.7 Truth values (“Booleans”)

You can compare numbers using comparison operators:

3 * 2 > 4[1] TRUE1 <= -3[1] FALSEDouble equal == is used to test equality:

5 == 2 + 3[1] TRUE!= (not equal) is used to test inequality:

1 + 3 != 4[1] FALSEThe result of a comparison is a value that tells you whether what you wrote is true or not. Such values are called Boolean values, or “Booleans” (after the logician George Boole).

They must be written in capitals:

TRUE[1] TRUEFALSE[1] FALSEOtherwise, R looks for a variable with that name:

true## Error: object 'true' not found Don’t name your variables with names such as

Don’t name your variables with names such as true or false, you might get bitten.

Code failing with an error message is often better than undetected mistakes.

Code failing with an error message is often better than undetected mistakes.

2.7.1 Boolean operations

You can use Booleans to compute more Booleans, using logical operators:

TRUE & FALSE # And[1] FALSETRUE | FALSE # Or[1] TRUE!FALSE # Not[1] TRUEFALSE & ((1 == 1) | TRUE)[1] FALSEIn the last command, we mix comparison expressions (that have Boolean results) with “literal” Booleans (FALSE and TRUE) The whole result has a Boolean value.

Expressions can be composed of a hierarchy of expressions combined with operators. Operators have precedence rules, telling what operations should be evaluated first.

To be sure that expressions will be evaluated in the intended order, we can use parentheses in complex expressions, as above. Here is a decomposition of the evaluation:

1 == 1is TRUE, therefore((1 == 1) | TRUE)isTRUE.- This expression is hence equivalent to

FALSE & TRUE, which isFALSE.

(Parentheses can be used in any expression, not just in Boolean calculations.)

2.8 Strings (text)

Computer programs are much more useful for humans if they can communicate with them using text, and let them manipulate text, and not just simple mathematical objects such as numbers or Booleans. Indeed, text is a powerful tool to convey complex information. Text can be used to encode information in various ways.

Fortunately, R offers (and heavily uses) the possibility to represent text.

We can define character strings (or just “strings”) variables to represent text, placing it "between quotes":

selected_color <- "blue"

file_name <- "counts.csv" Note that there are no quotes when one writes

Note that there are no quotes when one writes TRUE, FALSE or a variable name. This is a very important difference to keep in mind.

We will see later what can be done with strings.

3 Vectors

3.1 Creating or getting vectors

You can group values of the same type in a vector, using c (combine).

Numbers:

my_numbers <- c(6, -1, 0, 6)

my_numbers[1] 6 -1 0 6Strings:

phenotype <- c("round", "wrinkled", "round", "wrinkled")

phenotype[1] "round" "wrinkled" "round" "wrinkled"Booleans:

tests <- c(TRUE, FALSE, FALSE, TRUE)

tests[1] TRUE FALSE FALSE TRUEOf course, values in a vector can be the result of a computation:

c(FALSE, 1 == 1, 2 != 2)[1] FALSE TRUE FALSEThey can be variables too:

a <- 2

b <- 3 + 4

c(a, b)[1] 2 73.1.1 Named vectors

There is a potentially nice feature in R that string-capable people like you can benefit from: named vectors.

Elements in a vector can indeed have names.

To have a named vector, you can give names to its elements at creation time, by prefixing each element with the desired name and an equal sign:

mix <- c(buffer=2.5, MgCl2=0.75, dNTP=0.5, primer1=0.5, primer2=0.5, water=20.125, Taq=0.125)The names are then displayed above the corresponding values when you display the vector:

mix buffer MgCl2 dNTP primer1 primer2 water Taq

2.500 0.750 0.500 0.500 0.500 20.125 0.125 3.1.2 Automatic conversions

Be careful. Vectors can only contain values of the same nature. If you try to create a vector of values of mixed types, R will automatically convert values in a way that may or may not be what you want. You will not get warnings.

Be careful. Vectors can only contain values of the same nature. If you try to create a vector of values of mixed types, R will automatically convert values in a way that may or may not be what you want. You will not get warnings.

c(1, "two", 3, "four")[1] "1" "two" "3" "four"c(TRUE, "OK", FALSE, "Ouch!")[1] "TRUE" "OK" "FALSE" "Ouch!"c(1, FALSE, "three")[1] "1" "FALSE" "three"In the above cases, there is no reasonable way arbitrary words can be interpreted as numbers or Booleans. The only option left is to turn everything into strings (just add quotes!).

Guess what happens when you just combine numbers and Booleans?

Guess what happens when you just combine numbers and Booleans?

3.1.3 Sequences of numbers

There’s an useful shortcut to create a vector of successive numbers:

2:7[1] 2 3 4 5 6 7a:b[1] 2 3 4 5 6 7-4:4[1] -4 -3 -2 -1 0 1 2 3 4You can even generate decreasing series:

0:-5[1] 0 -1 -2 -3 -4 -53.1.4 Extracting columns from a data frame

If you remember well, we saw how to get a column from our

If you remember well, we saw how to get a column from our mi data frame using the $ sign (mi$Age, for instance). Data frame columns are actually vectors. Try to save the ages from that data frame in an ages variable.

3.2 Vectorized operations

Certain operations can be applied on all the values in a vector simultaneously:

1:5 + 2[1] 3 4 5 6 7!c(FALSE, TRUE, TRUE, FALSE)[1] TRUE FALSE FALSE TRUEc(6, 4, 2, 1, 3, 5) < 4[1] FALSE FALSE TRUE TRUE TRUE FALSEYou can use this to easily compute the quantities of reagents when preparing a mix in the lab, if you have the recipe for a certain amount of mix (as stored in the mix variable previously) and want another amount:

original_mix_amount <- 25

desired_mix_amount <- 20

mix * desired_mix_amount / original_mix_amount buffer MgCl2 dNTP primer1 primer2 water Taq

2.0 0.6 0.4 0.4 0.4 16.1 0.1 Easy parallelized cross-multiplication!

These vectorized operations are very powerful, especially when dealing with large amounts of data. They are widely used by R practitioners, and you will use them during this course.

These vectorized operations are very powerful, especially when dealing with large amounts of data. They are widely used by R practitioners, and you will use them during this course.

3.3 Operations between vectors

It is also possible to apply operations between two vectors.

c(1, 2, 3, 4) + c(2, 4, 6, 8)[1] 3 6 9 121:3 * 1:3 # equivalently: (1:3) ^ 2[1] 1 4 9The result is a vector in which the values are obtained by applying the operation on elements at the corresponding positions in the original vectors.

3.3.1 Value recycling

Now try to combine vectors of different sizes. Does it work? If yes, what happens?

Now try to combine vectors of different sizes. Does it work? If yes, what happens?

Suppose we have the following vectors:

v1 <- 1:6

v2 <- 1:3 Without entering the computation in R, think what would be the result of

Without entering the computation in R, think what would be the result of v1 <= v2?

3.4 Combining vectors

You can add one or more values to a vector using c:

euro_bikes <- c("Pinarello", "BMC")

amer_bikes <- c("Cannondale", "Kona", "Specialized")

bikes <- c(euro_bikes, amer_bikes)

bikes[1] "Pinarello" "BMC" "Cannondale" "Kona" "Specialized"(This operation is sometimes called “concatenation”.)

It is also possible to prepend or append just one value to a vector:

c("Peugeot", bikes)[1] "Peugeot" "Pinarello" "BMC" "Cannondale" "Kona"

[6] "Specialized"c(bikes, "Raleigh")[1] "Pinarello" "BMC" "Cannondale" "Kona" "Specialized"

[6] "Raleigh" 3.5 Indices and length

Ask R to display a looong vector:

21:60 [1] 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45

[26] 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60The numbers between square brackets are here to help us count the number of elements. These are indices of the elements beginning a displayed line: 21 is the 1\(^{st}\) element, 44 is the 24\(^{th}\).

One can get the number of elements in a vector using the predefined length function:

length(21:60)[1] 40Functions are executed by writing their names followed by opening and closing parentheses. Between parentheses are the arguments of the function, if any. Here, we give a vector to the length function, and it gives back a result, which is the number of elements in the vector.

We’ll see more functions later. We have actually already seen another one: c is a function that can have more than 1 argument.

And another very useful function is sum:

sum(c(1, 1, 1))[1] 3 Given the following vector, can you think of a way to compute the number of elements above 5?

Given the following vector, can you think of a way to compute the number of elements above 5?

nums <- c(3, 7, 2, 3, 2, 8, 6, 2, 6)3.6 Accessing vector elements

3.6.1 Access by index

One can take elements in a vector using square brackets and their indices:

ages_toy <- c(34, 3, 18, 5, 56, 41, 23, 101)

ages_toy[3] # one element[1] 18ages_toy[3:6] # a slice[1] 18 5 56 41ages_toy[c(8, 6, 4, 2)] # elements in arbitrary order[1] 101 41 5 3 How would you get the last element?

How would you get the last element?

One can also change the value of an element:

ages_toy[1] 34 3 18 5 56 41 23 101# It's the little child's birthday!

ages_toy[2] <- ages_toy[2] + 1

ages_toy[1] 34 4 18 5 56 41 23 101(Notice how the second element has been incremented.)

You will encounter other similar situations in R, where the same syntax can be use either to get or set a value.

We previously constructed a vector containing two European bicycle brands first, then three US brands.

We previously constructed a vector containing two European bicycle brands first, then three US brands.

bikes[1] "Pinarello" "BMC" "Cannondale" "Kona" "Specialized"Try to insert the Taiwanese brand "Giant" between the two parts.

3.6.2 Boolean sub-setting

A vector of Booleans can be used to extract portions of another vector:

ages_toy[1] 34 4 18 5 56 41 23 101ages_toy[c(

TRUE, FALSE, FALSE, FALSE,

TRUE, TRUE, FALSE, TRUE)][1] 34 56 41 101Only those values at positions where the vector of Booleans contains TRUE are selected.

This can be very useful, because a vector of Booleans can be obtained by applying to a vector an operation that results in a Boolean:

is_old <- ages_toy > 30

is_old[1] TRUE FALSE FALSE FALSE TRUE TRUE FALSE TRUEYou can therefore easily select the ages of the old guys in one command:

ages_toy[is_old][1] 34 56 41 101You can write this directly in one step:

ages_toy[ages_toy > 30][1] 34 56 41 101An interesting function is which, that gives the indices of the TRUE elements in a Boolean vector:

which(is_old)[1] 1 5 6 8ages_toy[which(is_old)][1] 34 56 41 101This is actually equivalent to ages_toy[is_old], but more explicit, in a sense (sub-setting is done using indices).

3.6.3 Access by name

If you remember well, we saw earlier that it was possible to create vectors where elements are named.

When elements of a vector are named, they can be accessed by their name (represented as a string, i.e. between quotes):

composition <- c(Ca=73, Mg=2, Na=4.5, K=1.3)

composition["Na"] Na

4.5 composition[c("Mg", "Ca")]Mg Ca

2 73 The result is a named vector.

Accessing elements by indices also works:

composition[4] K

1.3 Let’s practice a little more with this useful feature.

Suppose that (for those not interested in PCR mixes), we have a cooking recipe, but that we got the quantities and names of the ingredients separately:

# Recette des cannelés du "professeur Bécavin de la liste bioinfo"

cake_recipe <- c(225, 1, 2, 1, 1, 125, 500)

cake_ingredients <- c("sugar", "butter", "eggs", "yolk", "vanilla", "flour", "milk")It is possible to add names to a vector after creation, assigning a vector of strings to the names, as follows:

names(cake_recipe) <- cake_ingredients

cake_recipe sugar butter eggs yolk vanilla flour milk

225 1 2 1 1 125 500 The names are preserved by some operations. Now, suppose our recipe serves 12 people.

How much flour and milk would we need for 25 people?

How much flour and milk would we need for 25 people?

3.7 Useful vector-generating functions

3.7.1 Repeating values

The rep function generates a vector by repeating another vector a certain amount of times:

rep(TRUE, times = 3) # same as c(TRUE, TRUE, TRUE)[1] TRUE TRUE TRUErep(1:2, times = 4) # same as c(1:2, 1:2, 1:2, 1:2)[1] 1 2 1 2 1 2 1 2times is an optional argument. By default, the first argument is repeated just once: the default value of this argument is 1. If we want the value of the first argument to be repeated more than once, we need to give a different value to the times argument, with the = sign.

A function can have several optional arguments, all having names enabling us to tell the function unambiguously what argument we want to specify.

(Mandatory arguments often also have names, but the argument order avoids ambiguities.)

This function can be used to repeat each vector element instead of repeating the whole vector, using the each optional argument.

rep(1:2, each = 4)[1] 1 1 1 1 2 2 2 2times and each can even be combined.

How would you generate three times the following “motif”: one pair of

How would you generate three times the following “motif”: one pair of 1 followed by one pair of 2 (the equivalent of c(1, 1, 2, 2, 1, 1, 2, 2, 1, 1, 2, 2)) using the rep function?

3.7.2 Sequences of values

We have already seen the n:m syntax to generate numbers from n to m. The seq function offers more control on the way the sequence is to be generated thanks to its by optional argument:

seq(0, 8, by = 2)[1] 0 2 4 6 8The first two arguments are the starting and ending elements. You can also specify them using the to and from argument names. The by argument is the increment. One can use this to generate sequences of non-integral numbers:

seq(-1, 1, by = 0.25)[1] -1.00 -0.75 -0.50 -0.25 0.00 0.25 0.50 0.75 1.00Or, using all arguments’ names:

seq(from = -1, to = 1, by = 0.25)[1] -1.00 -0.75 -0.50 -0.25 0.00 0.25 0.50 0.75 1.003.7.3 Sorting values

If you have a vector containing numbers or strings, it is possible to sort the values using the sort function:

ages_toy[1] 34 4 18 5 56 41 23 101sort(ages_toy)[1] 4 5 18 23 34 41 56 101In the case of a vector of strings, the order is the “lexicographic” order, i.e. using similar sorting rules as in a dictionary:

bikes[1] "Pinarello" "BMC" "Giant" "Cannondale" "Kona"

[6] "Specialized"sort(bikes)[1] "BMC" "Cannondale" "Giant" "Kona" "Pinarello"

[6] "Specialized"There are however some subtleties. For instance, capital letters come before small letters. Strings representing numbers come before letters. This is due to how characters are encoded into numbers for the computer.

sort(c("a", "A", "b", "B", "1", "2"))[1] "1" "2" "a" "A" "b" "B" Be careful when sorting strings containing special characters. The order between special characters may depend on how the computer is set up.

Be careful when sorting strings containing special characters. The order between special characters may depend on how the computer is set up.

The sort function has a decreasing optional argument. To reverse the order, use decreasing = TRUE

sort(ages_toy, decreasing = TRUE)[1] 101 56 41 34 23 18 5 4sort(bikes, decreasing = TRUE)[1] "Specialized" "Pinarello" "Kona" "Giant" "Cannondale"

[6] "BMC" 3.7.4 Taking decisions using Booleans to make new vectors

Booleans are at the basis of the art of taking decisions.

Suppose we have a grade between 0 and 20 (for instance, 12):

grade <- 12Now we want to decide whether the student who got this grade "passed" or "failed".

The ifelse function allows us to take a decision (i.e. generate a value among several possibilities) based on a Boolean value given as first argument.

The result will be the second argument if the first is TRUE. Otherwise it will be the third.

ifelse(TRUE, 100, 0)[1] 100ifelse(FALSE, 100, 0)[1] 0Use it to generate a character string that will be either "passed" or "failed".

This can also be applied to a vector of grades, and produce a vector of "passed" or "failed" verdicts.

Let’s generate a (uniformly distributed) random sample of 10 grades between 0 and 20, using the sample function:

grades <- sample(0:20, 10, replace = TRUE)

grades [1] 8 13 10 11 17 0 12 20 17 3The first argument is the set of values from which we sample, the second argument is the number of values we want, and replace = TRUE is to allow the same element to be sampled more than once.

And now lets take decisions for all those 10 students at once:

ifelse(grades >= 10, "passed", "failed") [1] "failed" "passed" "passed" "passed" "passed" "failed" "passed" "passed"

[9] "passed" "failed"This operation can be decomposed in two steps:

- Buid a Boolean vector indicating whether grades in the

gradesvector are at least 10 (TRUE) or not (FALSE).

passed <- grades >= 10- Give this Boolean vector as first argument of

ifelse:

ifelse(passed, "passed", "failed") [1] "failed" "passed" "passed" "passed" "passed" "failed" "passed" "passed"

[9] "passed" "failed"4 String manipulations

4.1 Searching strings

The grep function takes two arguments: a pattern and a vector of strings. It returns the indices in the vector where the string matches the pattern:

week <- c("Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday")

grep("day", week)[1] 1 2 3 4 5 6 7grep("sd", week)[1] 2 3 4This is typically used to extract those strings by vector sub-setting:

week[grep("day", week)][1] "Monday" "Tuesday" "Wednesday" "Thursday" "Friday" "Saturday"

[7] "Sunday" week[grep("sd", week)][1] "Tuesday" "Wednesday" "Thursday" 4.1.1 Grep variants

grep accepts optional arguments.

Use value = TRUE to directly extract the values instead of the indices:

grep("day", week, value = TRUE)[1] "Monday" "Tuesday" "Wednesday" "Thursday" "Friday" "Saturday"

[7] "Sunday" grep("sd", week, value = TRUE)[1] "Tuesday" "Wednesday" "Thursday" Use ignore.case = TRUE to be case-insensitive:

grep("tu", week, ignore.case = TRUE)[1] 2 6There is also another function, grepl, that returns a vector of Booleans (with TRUE where the expression is found, FALSE otherwise) instead of the indices.

Use it to count the number of strings containing

Use it to count the number of strings containing "day" in the names of the days of the week.

Now use it to find the days of the week not containing

Now use it to find the days of the week not containing "day".

4.2 Combining strings

We can create longer strings from several strings with the paste function:

paste("I", "can", "paste", "words", "together")[1] "I can paste words together"The above use of paste results in a single string where the given string arguments are separated by spaces (" ").

It is possible to use a different separator to join the strings given to paste, thanks to its sep optional argument:

paste("I", "can", "paste", "words", "together", sep = "")[1] "Icanpastewordstogether""" is an empty string. Here, we tell the function paste to insert nothing between the words (a convenient shortcut to do just that is the function paste0).

This function may look a bit useless for the moment: We could directly type "I can paste words together". However, in more advanced situations, paste can be useful to automatically generate strings (for instance to use in figure legends).

paste can also act on vectors:

paste(c("WT", "WT", "KO", "KO"), c(1, 2, 1, 2), sep = "_")[1] "WT_1" "WT_2" "KO_1" "KO_2"You obtain a vector of strings whose elements result from pairwise concatenation of the corresponding elements from the original vectors.

Use the

Use the rep function to create the arguments of paste in the above example.

Value recycling happens when you use vectors of different lengths. This can be useful to quickly generate series of numbered strings, as follows:

paste("sample", 1:3, sep = "_")[1] "sample_1" "sample_2" "sample_3"Here, the first argument is actually a vector with only one element, which is re-used for concatenation with each of the elements from the second argument.

If you want to paste together elements contained in a vector, you can use the collapse argument, as follows:

paste(week, collapse = ", ")[1] "Monday, Tuesday, Wednesday, Thursday, Friday, Saturday, Sunday" You may need to create text where elements are separated by tabulations and having several lines (a very common way to organize scientific data). This can be achieved using special characters for the

You may need to create text where elements are separated by tabulations and having several lines (a very common way to organize scientific data). This can be achieved using special characters for the sep argument: "\t" for the tabulations and "\n" for the new lines.

Suppose we have vectors containing information about gene locations in a genome:

g1 <- c(chrom="I", start="4118", end="10230",

name="WBGene00022277", score=".", strand="-")

g2 <- c(chrom="I", start="10412", end="16842",

name="WBGene00022276", score=".", strand="+")

g3 <- c(chrom="I", start="17482", end="26778",

name="WBGene00022278", score=".", strand="-")(The above represents the genomic location of three genes in the genome of the nematode C. elegans, in BED format.)

Let’s try to make a textual representation of these vectors, as they would appear in a tab-separated file:

# Create some tab-separated lines

header <- paste(names(g1), collapse = "\t")

line1 <- paste(g1, collapse = "\t")

line2 <- paste(g2, collapse = "\t")

line3 <- paste(g3, collapse = "\t")

# Assemble the lines in a multi-line text

# (BED format doesn't use headers)

file_content <- paste(line1, line2, line3, sep = "\n")

file_content[1] "I\t4118\t10230\tWBGene00022277\t.\t-\nI\t10412\t16842\tWBGene00022276\t.\t+\nI\t17482\t26778\tWBGene00022278\t.\t-"4.3 Displaying text

The way the previous piece of text is displayed in the R console is quite literal: the special characters have not been interpreted as such. You can display the text with special characters “interpreted” using cat:

cat(file_content)I 4118 10230 WBGene00022277 . -

I 10412 16842 WBGene00022276 . +

I 17482 26778 WBGene00022278 . -cat not only prints character strings, but can also perform concatenation (as its name suggests), like paste: If you give several strings as arguments, they will be joined using a space (" ") or whatever other string you use as the sep argument:

# Let's add the header this time:

cat(header, line1, line2, line3, sep = "\n")chrom start end name score strand

I 4118 10230 WBGene00022277 . -

I 10412 16842 WBGene00022276 . +

I 17482 26778 WBGene00022278 . - Please do keep in mind that

Please do keep in mind that paste creates text, while cat displays text. This is not the same thing at all. If your intention is to have text that you can use within R for other purposes (further combining strings, providing strings as arguments to a function, \(\dots\)), don’t use cat. cat should rather be used to communicate with the world outside your program (such as giving feedback to the user while the program is running, or at the end, to report about the final outcome of the task the program is meant to perform).

5 INTERMISSION: How to get help

5.1 Internal documentation

R contains a documentation system, that you can visualize in the “Help” tab of the bottom-right pane.

To dive in R the documentation:

help.start()Getting help on a particular matter:

?TRUE

?grep

?paste # Note the default value for sep

?sample

?ReservedHelp documentation in R is separated in various sections (“Description”, “Usage”, “Arguments”, “Details”…). Sections such as “Examples” and “See also” are particularly useful when discovering R and extending your R vocabulary.

If you don’t recall exactly the function you are looking for, ?? can make a search for you:

??operators

??syntax

??csv5.2 Describe your environment

You can see all the variable defined in your environment looking at the “Environment” tab of the top-right pane in RStudio, or list them using the ls command:

ls()You can obtain information about your current session (loaded packages with version number, …) using sessionInfo:

sessionInfo()These two commands can be useful to debug cases where unexpected things happen.

When you try to get help from other people, give them the information provided by sessionInfo() This may help them understanding what is happening on your computer.

6 Exporting and importing data

We have briefly seen how to load a data set into R. It is now time to get into more details on this very essential subject.

6.1 A brief overview of tabular formats

Commonly used formats to store tabular data are the following:

- TSV (“tab-separated values”)

- CSV (“comma-separated values”)

- XLSX (Microsoft Excel spreadsheet format since 2007)

If you need to exchange data with bioinformaticians, knowing a few things about these formats may help ensure a smoother communication.

In particular, you need to be aware of fundamental differences between a simple text format and more complex spreadsheet formats.

6.1.1 “Simple text” formats

“Simple text” means that the files using these categories of format are directly encoded using relatively simple and well defined norms meant to represent text (such as ASCII or UTF-8), and can be opened with a general category of tools called text editors.

The basic and essential functionality of a text editor is to change the characters contained in a simple text file. This can be done in more or less sophisticated ways, but that’s all there is to it. Once the file has been saved and closed, it can in principle be opened and edited using any other text editor.

Simple text files have a long history and a wide diversity of applications. For instance, they are used to store the source code of computer programs. As such, an R script should be saved in simple text format, and the editor of RStudio is actually a text editor. It was likely not developed with this goal in mind, but you can easily use it to edit a Python program if you want (the code will not run in the console, of course, but that’s something different).

Text editors are not to be confounded with word processors.

Text editors are not to be confounded with word processors.

Simple text formats differ by the conventions used to organize the information they contain. Some of them are meant to represent tabular data, in particular TSV and CSV.

6.1.1.1 TSV format

Files in TSV format, as the name suggests, consist in simple text format, where columns are separated by the special tabulation character ("\t"). For instance:

Column1 Column2 Column3

Value1 Value2 Value3(Note the larger intervals between values, due to tabulations, ensuring their alignment with column headers.)

The main strengths of TSV format are the following:

It is simple, and therefore easy to handle in computer programs (among which some widely used and powerful command-line interface utilities).

It is rather unlikely that the

"\t"separator will be needed inside the textual data present in the columns, thereby making it suitable for most simple tabular information.When displayed on a command-line interface, the wide space taken by the

"\t"separator will make the column delimitation more visible than when using commas.

6.1.1.2 CSV format(s)

Files in CSV format are also simple text files, where the column separator is, strictly speaking, the comma (","). For instance:

Column1,Column2,Column3

Value1,Value2,Value3However, this is also sometimes used to refer more generally to other simple text formats, including TSV and CSV2 (where the separator is a semicolon (";")).

In CSV format, textual data is sometimes placed between quotes in order to allow the presence of the column separator character within textual information.

The CSV2 format uses the semicolon instead of the comma as column separator, in order to enable the use of the comma as decimal separator inside columns containing numeric information.

Example using both text between quotes and semicolon separators:

ID;Info;Coeff

"A";"This text field contains spaces, commas and semicolons: ;";0.666.1.2 “Complex” formats

The XLSX format actually consists in a compressed archive containing various files. This enables the storage of complex information, such as formatting properties (colours, fonts), embedding of graphics, file modification history\(\dots{}\) This format was developed for a specific spreadsheet application (Excel), but organized and documented in such a way that other applications could also be made to use it (this was more complicated with the earlier XLS format).

A consequence is that complex spreadsheet applications are needed in order to handle files in this format. Another is that such files tend to take much more storage space than simple text ones.

If you don’t need the advanced features associated with XLSX format, you’ll save disk space and make the work of your bioinformatician colleagues simpler by using TSV or CSV.

If you don’t need the advanced features associated with XLSX format, you’ll save disk space and make the work of your bioinformatician colleagues simpler by using TSV or CSV.

6.2 Exporting tables from spreadsheet applications

In spreadsheet applications (such as LibreOffice, OpenOffice, Excel\(\dots\)) you can export your spreadsheets in a format which is bioinformatics friendly such as TSV or CSV.

In your favourite spreadsheet application you should have in the “File” menu a “Save as” or “Export as” option which allows you to export your data to TSV or CSV format.

Simple text formats do not handle multiple spreadsheets at once, so normally a warning will be issued by your application saying that only the current/active spreadsheet will be saved.

Simple text formats do not handle multiple spreadsheets at once, so normally a warning will be issued by your application saying that only the current/active spreadsheet will be saved.

You may also voluntarily restrict what you export by selecting a particular zone of the table before actioning the menus. The actual behaviour may depend on the software you are using.

You may also voluntarily restrict what you export by selecting a particular zone of the table before actioning the menus. The actual behaviour may depend on the software you are using.

6.3 Checking the exported table

To properly import a table in R, you need to make sure everything is under control. In particular, you should check that you have an answer for each of the following questions:

- What is the column separator?

- Are there column headers?

- Are text fields quoted?

- What decimal separator is used?

- Are there commented lines, and if so, how are comments indicated?

You may have had full control on the export process, in which case the answers should be known to you.

You can also check a posteriori, by opening the exported file. You should be able to do it using TextEdit (on Mac OSX) or Notepad (on Windows), or any text editor of your choice.

If you happen to be skilled enough with command-line utilities, you can of course also use them to get the answers.

6.4 Importing tables in R

6.4.1 Using the graphical interface:

As we saw before, in RStudio we can import both CSV and TSV files using the “Import Dataset” menu and choose “From Text (base)\(\dots\)”. Then check that the options correspond to how your file is formatted.

6.4.2 Using the console:

TSV and CSV files can be imported as data frames using the read.table function. Depending on the actual format, some arguments will have to be changed. There are also shortcut functions more readily suited to a given format, that are very similar to read.table but with different default values for the optional arguments.

You can get information about these functions and their arguments by reading the help page for read.table:

?read.tableSome potentially useful arguments are the following:

sep, to specify the column separator.header, to indicate that the first line contains column headers.quote, to specify the character used to delimit text fields.dec, to specify the character used as decimal point in numbers.skip, to use in case some lines have to be ignored at the beginning of the file.comment.char, to specify one or more characters that indicate comments.row.names, to specify a column number that should be used as row names instead of as a standard data column.stringsAsFactors, to indicate whether text columns should be converted into factors or not.

The read.csv and read.delim variants of read.table assume that the first line contains column headers (header = TRUE) and use a comma (sep = ",") or a tabulation (sep = "\t") as column separators, respectively.

7 Descriptive statistics on vectors

Statistical analysis has several phases, among which:

- Data collection.

- Descriptive statistics (or “exploratory analysis”): characterizing the data.

- Inferential statistics: extrapolating from the data (to estimate confidence intervals, to test hypotheses\(\dots\)).

The third phase will be nourished by information obtained during the second.

In practice, a series of measures (collected for a certain number of samples, for instance) will typically be represented using a vector in R. Those vectors will very often be columns in a data frame reporting several series of measures for the same sampled individuals. For the moment, we will only deal with one vector at a time.

7.1 Samples and random variables

During the collection phase, individuals from a population are sampled. One (or several) characteristic(s) of interest are recorded. The measure of a characteristic of interest varies between individuals. Each one of these observations can be viewed as a realization of a random variable.

For instance, if a mouse is taken from the lab’s collection and its weight is measured, a certain value will be recorded. If another mouse is taken (or the same but at another time), and its weight is measured, a possibly different value will be recorded. This process of selecting a mouse and measuring its weight involves many causes of variability (hence the term “variable”), that are not predictable (hence the qualification as “random”). A sample of values is constituted by the repetition of the same process, and is therefore seen as a series of realization of the same random variable.

Before diving into more theory, let’s do a very practical exercise.

We prepared for you a small table in the form of a XLSX-formatted file. Download it from the link, and try to convert it in a format suitable for loading into R.

We prepared for you a small table in the form of a XLSX-formatted file. Download it from the link, and try to convert it in a format suitable for loading into R.

Now use the

Now use the read.csv function to load it as a mice data frame into R. See ?read.csv for some help.

Let’s extract from this table the column containing the sexes of the mice:

mice_sexes <- mice$sexTo get a smaller data frame containing only the data for males, do the following (you will have to wait the chapter about data frames for explanations):

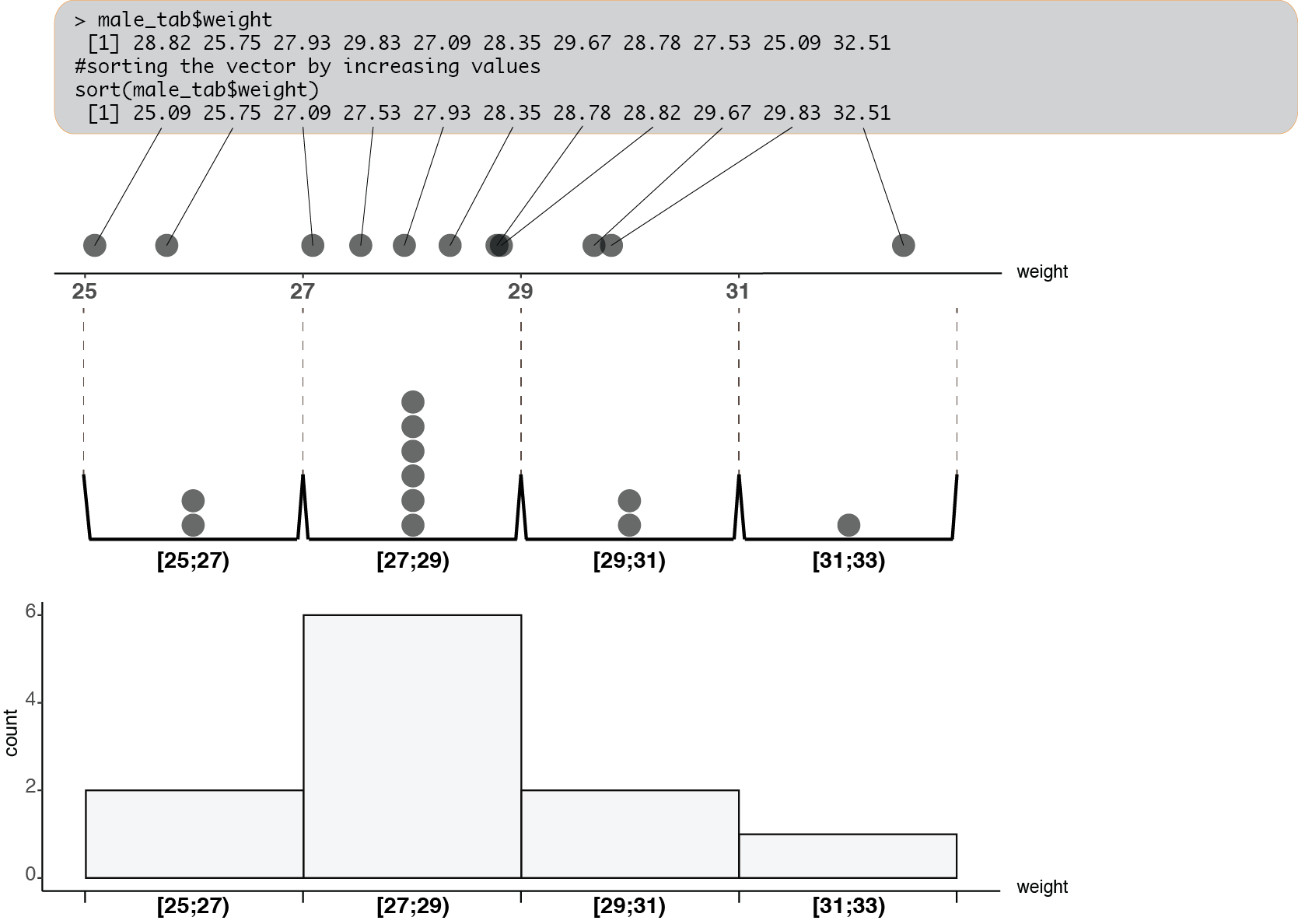

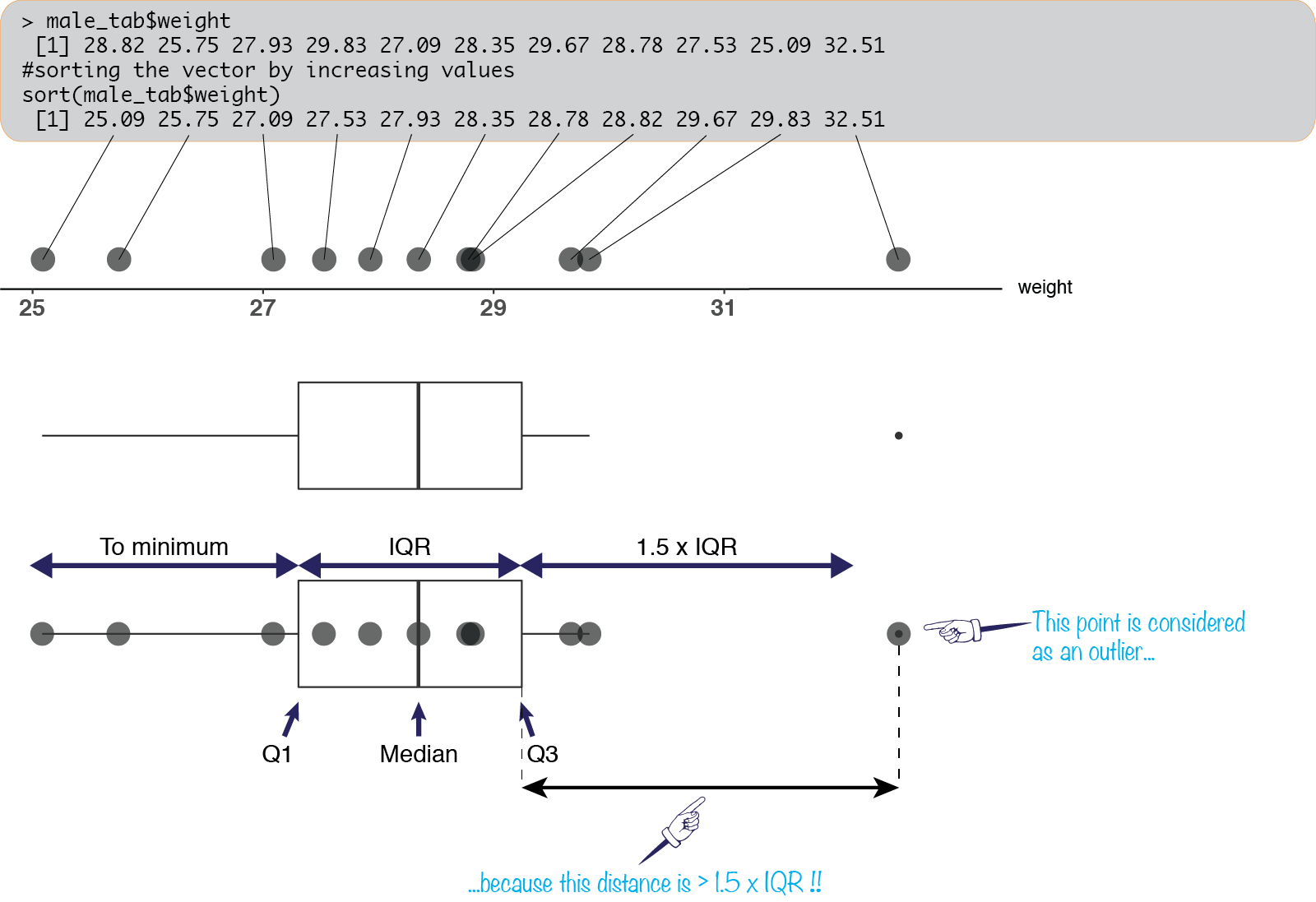

male_tab <- mice[mice_sexes == "M",]We can quickly visualize the sampled weights, sorted using sort:

sort(male_tab$weight) [1] 25.09 25.75 27.09 27.53 27.93 28.35 28.78 28.82 29.67 29.83 32.51We have seemingly random values between \(25\) and \(33\).

7.1.1 Types of random variables

Depending on the nature of the data, the values can fall into different categories of random variables.

The data can be:

- quantitative: Quantities encoded by numbers.

- qualitative: Qualities encoded with arbitrary labels (sometimes called factors in R).

Quantitative random variables can be:

- continuous: Measures falling within a continuous range of numbers (example: size of a person).

- discrete: Countable quantities, corresponding to integral numbers (example: number of children a person has).

Qualitative random variables can be:

- ordinal: A meaningful order can be defined between the different possible labels. This often results from an underlying quantitative variable (example: amount of physical activity labelled using levels such as “low”, “high” or “medium”).

- categorical (also called nominal): There is no meaningful order (example: eye colour).

Note that in a broader sense, qualitative variables are discrete. Indeed, their possible values are not numbers, but they can take a countable number of values.

As a consequence, a number of statistical tools will be equally applicable to discrete quantitative variables and to qualitative variables.

Ordinal qualitative variables are actually quite similar to discrete quantitative variables. It is easy to associate successive integers (1, 2, 3, \(\dots\)) to successive levels of an ordinal qualitative variable.

It is sometimes possible to view the same data in several ways, and have different options to encode them.

male_tab$weight and mice_sexes both are vectors of data. In terms of the categories we just described, what kind of random variable would you associate to each of them?

7.1.2 Population and sample

In statistics, we usually don’t have access to the population we want to study. We only have access to a fragment of it, the sample.

We are going to use the information from this sample to extract (or, more accurately, to infer) general rules about the population. These rules are what is often called a model.

The choice of the model and its parameters will be based on various considerations, including observations made on the samples using descriptive statistics.

7.2 Distributions

All sorts of operations can be done to describe a sample of values, which can easily be executed using functions in R.

7.2.1 Describing quantitative data

7.2.1.1 The histogram

A quick and easy thing to do is to plot a histogram, using the hist function.

We already did it for the ages recorded in the Milieu Intérieur data a long time ago. Let’s do it again:

hist(ages)The \(x\)-axis displays the range of the values in the studied sample. It is split into non-overlapping contiguous intervals (usually of equal lengths). On the above graph, the intervals are bins of 5 years each, i.e. \([20-25)\), \([25-30)\dots\)

Never plot a histogram for qualitative data! The \(x\)-axis of a histogram is indeed meant to represent a continuous range of numerical values. For discrete data, a barplot is usually more suited (we’ll see how to do this very soon).

Never plot a histogram for qualitative data! The \(x\)-axis of a histogram is indeed meant to represent a continuous range of numerical values. For discrete data, a barplot is usually more suited (we’ll see how to do this very soon).

The \(y\)-axis represents the amount of data in each one of the intervals, and is labelled “Frequency”.

For finer control on the representation, the breaks optional argument allows us to specify the boundaries of the bins.

Let’s try it using our small mice weights example:

hist(male_tab$weight, breaks = seq(from = 25, to = 33, by = 2))Here, we made bins of width \(2\), thanks to the by argument of the seq function.

The width of the bins is a compromise between accurately representing the data (thin bins), and effectively summarizing (wide bins).

Here are two other possible choices:

hist(male_tab$weight, breaks = seq(from = 25, to = 33, by = 0.25))hist(male_tab$weight, breaks = seq(from = 25, to = 33, by = 4))\(2\) looks like a good compromise.

Let’s look more into details how a histogram is made. The data points are “distributed” in the bins according to their values:

This kind of graphical representation informs us about the distribution of the values in the vector: where values can be found, how broadly they are spread, where they are more concentrated\(\dots\)

Let’s have a quick look at some other measures recorded in our mi data frame.

hist(mi$HeartRate)hist(mi$Temperature)The histogram representation reveals striking differences between the distributions of those random variables, and that of the ages.

7.2.1.2 Numerical summaries

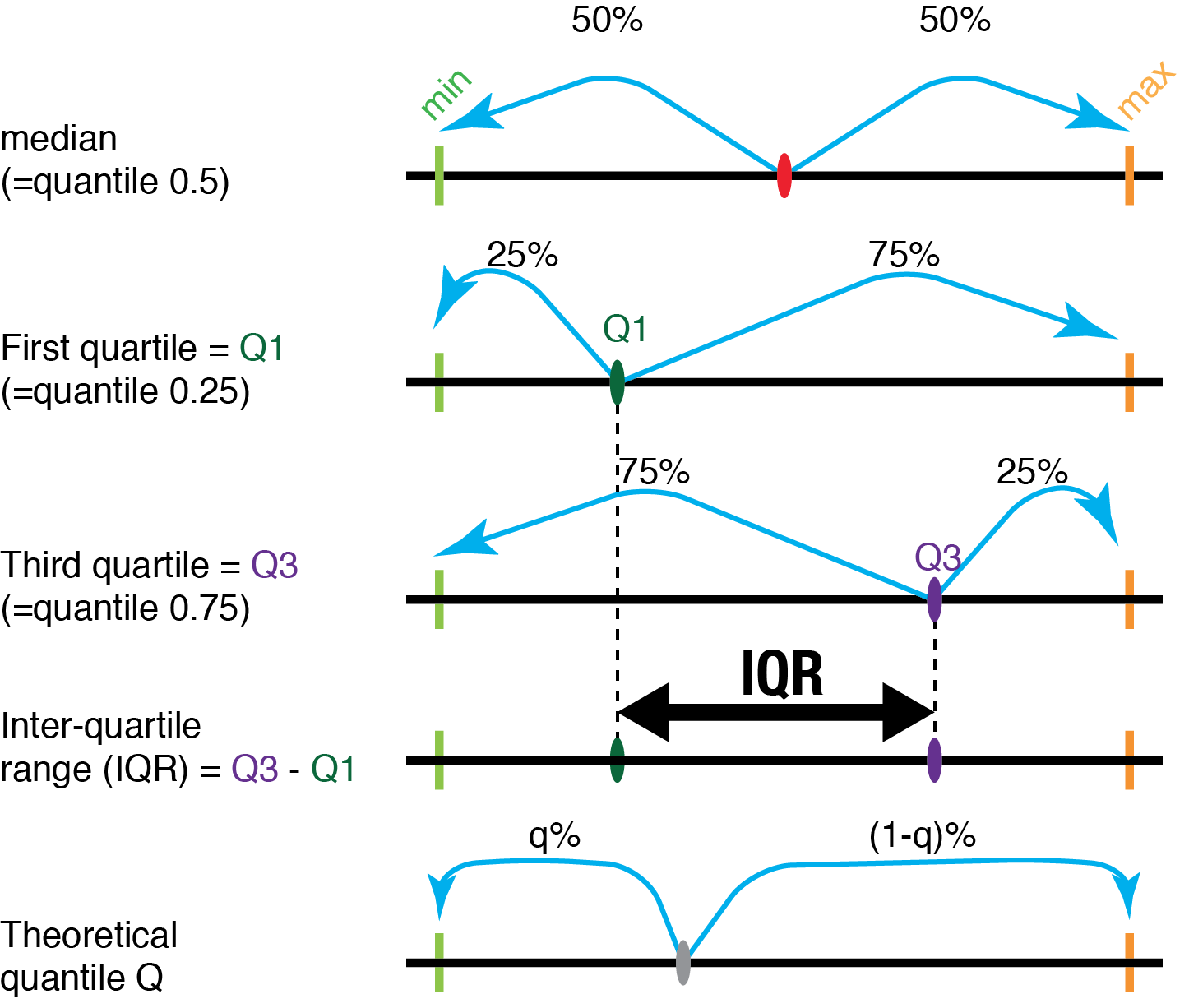

A distribution can also be characterized using numbers, such as the mean value, and others. Some of these numbers can be obtained using the summary function, in the form of a named vector:

summary(ages) Min. 1st Qu. Median Mean 3rd Qu. Max.

20.17 35.83 47.71 46.49 58.35 69.75 summary(mi$HeartRate) Min. 1st Qu. Median Mean 3rd Qu. Max.

37.00 54.00 58.00 59.21 65.00 100.00 summary(mi$Temperature) Min. 1st Qu. Median Mean 3rd Qu. Max.

35.70 36.20 36.40 36.43 36.60 37.70 We will soon learn in more details what all these values mean.

We can see that these summary values differ. This reflects differences in the shapes of the histograms and in values on their \(x\)-axes.

The histogram and these summary values are our first step at practicing descriptive statistics.

7.2.2 Describing qualitative data

Now what happens in the case of discrete or qualitative variables?

We can try the summary function on other columns of the data frame:

smoking <- mi$Smoking

summary(smoking)Active Ex Never

161 223 432 sex <- mi$Sex

summary(sex)Female Male

417 399 summary gives different kinds of results, depending on the kind of data it received. Here, the data was encoded as factors, a kind of value in R that we haven’t discussed in details yet. Factors are very well suited to represent qualitative variables. This is what is obtained by default for columns containing text in a table loaded in R.

When given factors summary gives the number of individuals falling into each category, or level.

7.2.2.1 Counting using table

We can obtain the same information (in a slightly different form) using the table function:

table(smoking)smoking

Active Ex Never

161 223 432 table(sex)sex

Female Male

417 399  Contrary to

Contrary to summary, table works the same with any kind of vector, not just factors. It can be used to count Booleans, strings, numbers\(\dots\) Use it!

Values from a table can be extracted using the corresponding labels, like with named vectors:

counts_smoking <- table(smoking)

counts_active <- counts_smoking["Active"]

counts_activeActive

161 counts_inactive <- counts_smoking[c("Ex", "Never")]

counts_inactivesmoking

Ex Never

223 432  How would you obtain the total number of inactive smokers ?

How would you obtain the total number of inactive smokers ?

Using table, a contingency table can be created to highlight the interrelation between two variables. In our case, we can create such a contingency table to link the two variables: smoking habits and sex.

table(mi$Sex, mi$Smoking)

Active Ex Never

Female 77 95 245

Male 84 128 187This table displays the number of individuals observed to have a particular combination of factors. This type of table is used to study the association between two kinds of classification (for instance, classification according to the sex, and classification according to the smoking habits). It is used in particular in Fisher’s exact test.

For the contingency table to make sense, the arguments should be vectors corresponding to measures made over the same series of individuals. In particular, the order of the individuals should be the same in all the vectors.

For the contingency table to make sense, the arguments should be vectors corresponding to measures made over the same series of individuals. In particular, the order of the individuals should be the same in all the vectors.

7.2.2.2 The barplot

To visualize the distribution of categorical data, the barplot function can be used with a table of counts. The result is a graph where each category will be represented by a bar:

barplot(counts_smoking)barplot(table(sex)) Do not use barplots for non-discrete data! Barplots are not histograms:

Do not use barplots for non-discrete data! Barplots are not histograms:

- The bars are not contiguous.

- The order of the categories on the \(x\)-axis of a barplot can be changed, not the order of the bins in a histogram.

7.2.3 From data distribution to population model

One important use of descriptive statistics is to help us choosing the correct tools for later analyses. One aspect is to characterise our samples in terms of the distribution of its values. The distribution of the values in the sample may then suggest ways to model the distribution of the values in the population. This distribution model will be an essential tool in later phases of statistical analysis.

The word “distribution” can be used for two different but related ideas:

The word “distribution” can be used for two different but related ideas:

- How the values are distributed in a sample (empirical distribution).

- How the values would be distributed if the random variable from which our sample was observed behaved according to certain rules, to a certain model (theoretical distribution).

In the latter sense, a distribution is characterized in terms of probabilities. What is the probability to observe a given value, or to observe a value within a given range?

We will now present some common ways to obtain a distribution to model a random variable.

7.2.4 Discrete distributions

Discrete distributions will be preferred to model naturally discrete quantities (such as numbers of heads on hydras or numbers of popes at certain times in history) that will be represented using integral numbers. They can also be used for some continuous aspects of the physical world that are typically modelled using discrete classes, such as colours (the spectrum of radio-frequencies is continuous, but it is often more practical to score observations according to a discrete set of colours).

A model for a discrete distribution would consist in associating each possible value with the probability of obtaining it when randomly sampling an element from the population.

For instance, the “Milieu Intérieur” data frame contains information regarding individual’s relationships with smoking. This is scored using three categories (a factor with three levels, in R terminology):

Active, for active smokers;Ex, for people that used to smoke but no longer do;Never, for people that never got into smoking.

If we wanted a model for this random variable, we could associate each category with a number, between \(0\) and \(1\), and such that the three numbers sum to \(1\) (this is a required property for probabilities). Determining the values for these numbers could be done in various ways (using a priori knowledge, estimating the values from the sample, \(\dots\)).

For instance, we could just use the proportions of each category in the sample as probabilities for our model. This can be computed as follows:

counts_smoking <- table(smoking)

counts_smokingsmoking

Active Ex Never

161 223 432 nb_observations <- sum(counts_smoking)

nb_observations[1] 816proportions_smoking <- counts_smoking / nb_observations

proportions_smokingsmoking

Active Ex Never

0.1973039 0.2732843 0.5294118 Besides using such empirical probabilities to build a model, there are various well studied classical discrete distributions available: Bernoulli, binomial, uniform, Poisson\(\dots\)

Their ability to be mathematically described reflect some “rules”, some regularities or mechanisms, in the process underlying the random variable to which they are associated. This is what makes them useful to extrapolate beyond simple empirical probabilities. Indeed, empirical probabilities will fit the sampled data perfectly, but these probabilities will likely not reflect what happens at the whole population level (especially if the sample is small), resulting in poor behaviour in later phases of statistical analyses. This is sometimes called “over-fitting”.

We will now briefly present a few classical and simple discrete probability distributions.

7.2.4.1 Uniform distribution

This represents the probabilities of equally probable discrete events. Under such a distribution, if there are \(N\) mutually-exclusive events, each one has a probability of \(\frac{1}{N}\). The only parameter needed to use this distribution as a model is \(N\), the number of possible events.

For instance, the outcome of the throw of a standard dice is quite well modelled by a uniform distribution with 6 equally probable events (that we can label using the numbers \(1\) to \(6\)):

7.2.4.2 Bernoulli distribution

This represents the probabilities of a pair of mutually exclusive events. This very simple model has one parameter: the probability of one of the events (that is typically noted \(p\)). The probability of the other event is then \(1 - p\).

For instance, if we are interested in the probability of left-handedness, someone randomly drawn from a population could be either left-handed (\(L\)) or not (\(\bar{L}\)).

The following would be the distribution of probabilities if the probability of left-handedness was \(\frac{1}{10}\):

If we wanted a model for the

If we wanted a model for the Sex column of our mi data frame using a Bernoulli distribution, we could use the proportion of females as parameter p. Compute this proportion using the sex vector.

7.2.4.3 Binomial distribution

This represents the probabilities of achieving a certain number of times a given outcome in a series of drawings from the same Bernoulli distribution. This model takes two parameters: the number of drawings, and the probability of the outcome of interest in the underlying Bernoulli distribution.

For instance, assuming a coin flip can either result in a head or a tail, with equal probability (and nothing else), the probability of obtaining a head would be \(\frac{1}{2}\). (This is a case of discrete uniform and Bernoulli distribution at the same time.)

The probabilities of obtaining a certain number of tails in 5 coin flips can therefore be modeled using a binomial distribution with 5 events:

7.2.5 Continuous distributions

Physical measures such as sizes, weights, or durations are continuous by nature. Assuming an arbitrarily high precision, they could take any value between a pair of (possibly infinite) real numbers.

For such measures, each value is one possibility among an infinity of others. It is therefore not useful to associate individual probabilities to each possible value because the probability of one value among an infinity of other possibilities is infinitesimal.

A model for a continuous random variable should instead associate probability densities to real numbers in the (possibly unbound) range of possible values for that variable, in the form of a probability density function.

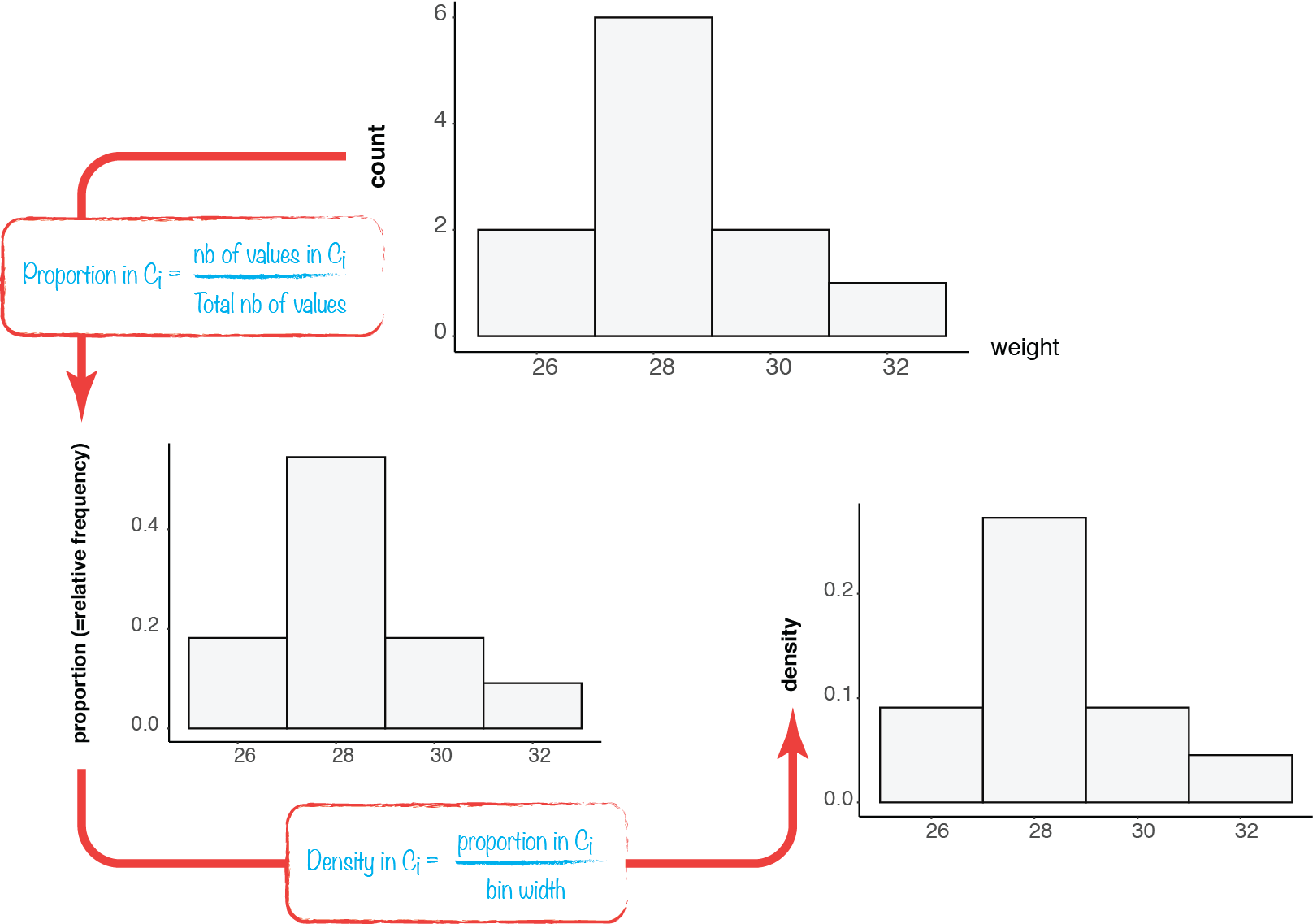

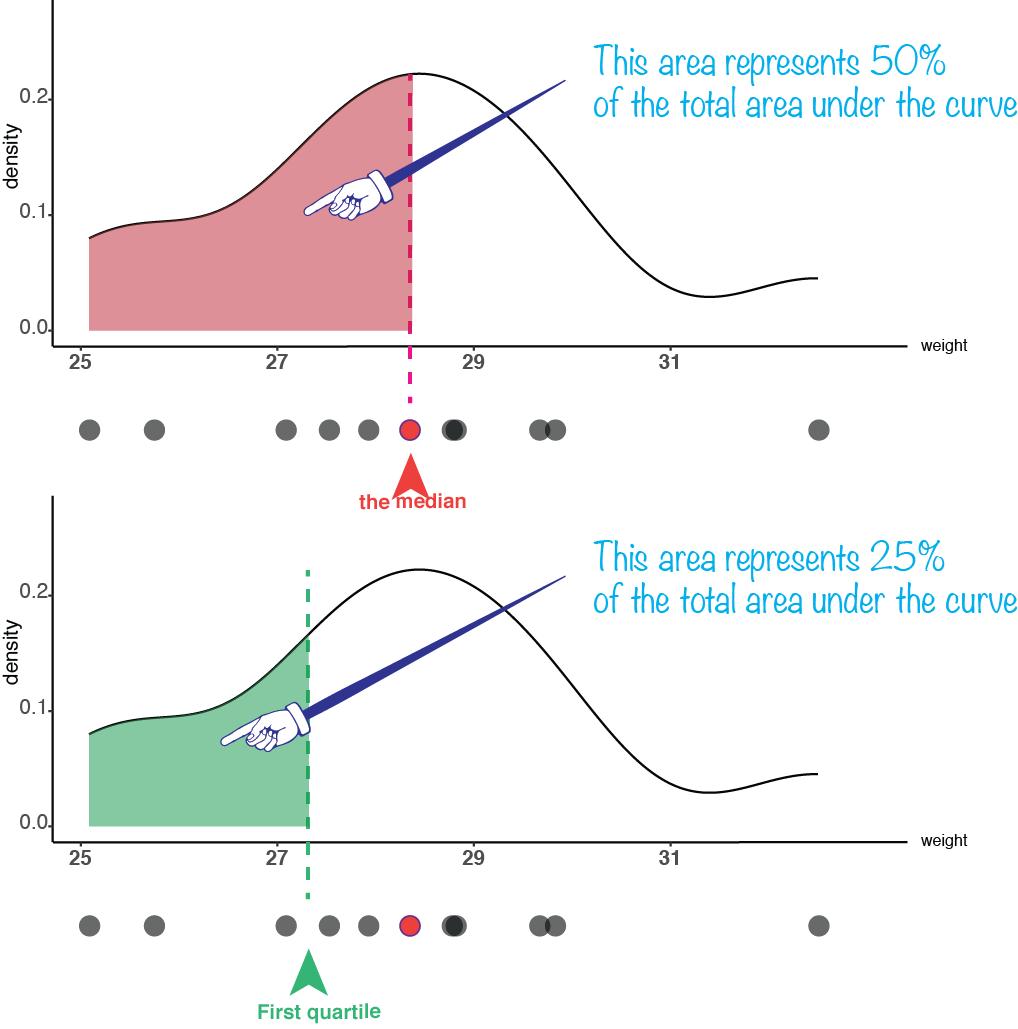

Let’s take again our male mice weights example and try to figure out what a probability density is.

As we have seen with the smoking habits example, a probability can be estimated from empirical data by taking the proportions of observations within each category. The same can be done with continuous data, using histogram bins instead of categories.

Now, a probability “density” can be derived from these per-bin probability estimates, by scaling them by the bin widths.

Here, we transform the probabilities of certain ranges of weights into a kind of probability “per unit of weight”.

Numerical analyses techniques exist that can smooth these densities to extrapolate a distribution for the underlying population. This is a way to provide an empirical model of continuous distribution for the population.

In R, the density function can make such an estimation of densities, which can be plotted using the plot function:

plot(density(male_tab$weight))(The plot function is a versatile function that tries to guess what kind of graph we want based on the nature and numbers of arguments.)

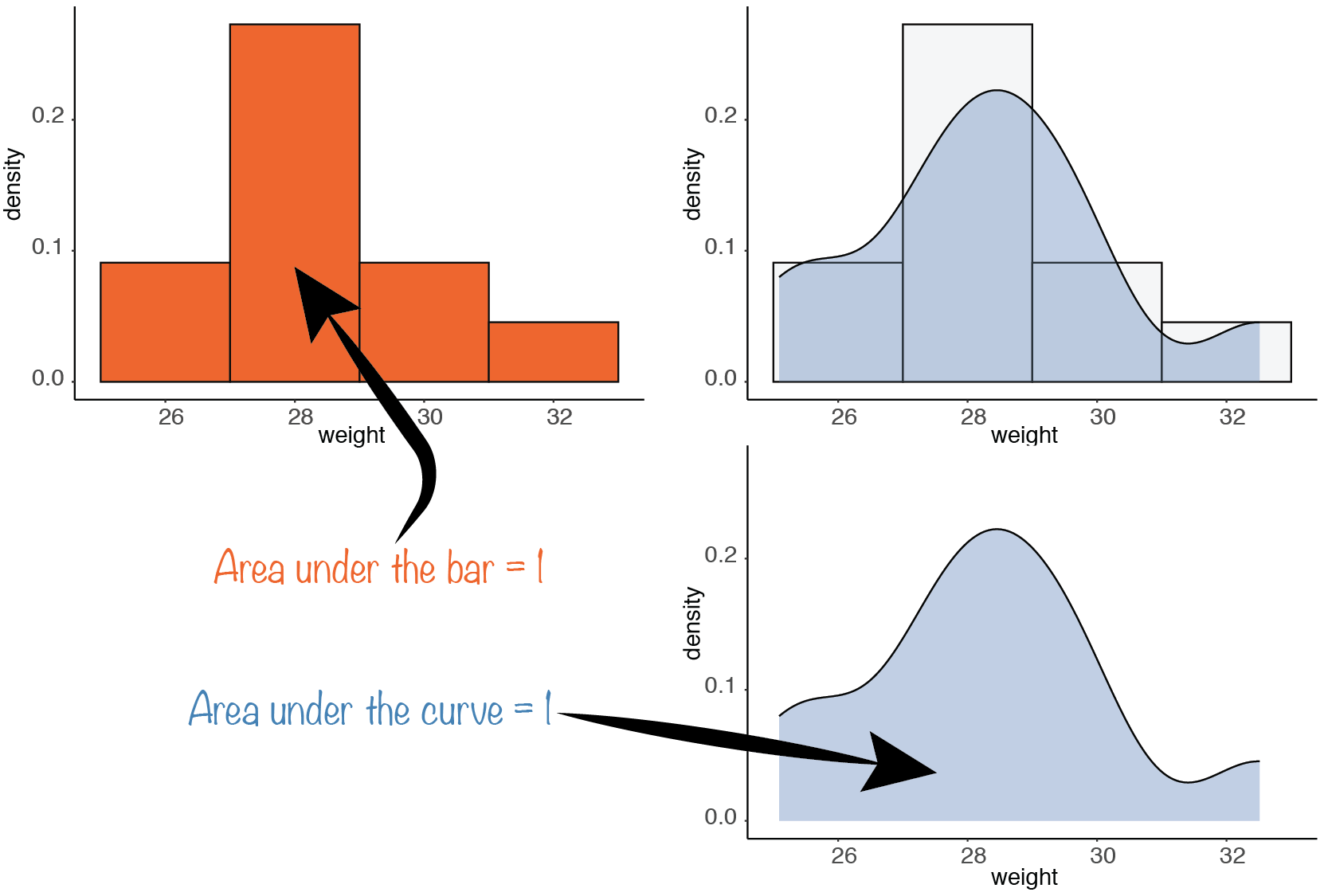

An important property is verified for both the smooth and non-smooth densities: The total area under the curve (sum of the areas of the bars, in the non-smooth case) is \(1\).

In mathematical terms, a probability density function is a positively-valued function that, once integrated between two values of its domain of definition, will give the probability to sample a value between these two boundaries. Therefore, the area below the whole curve corresponds to the probability that a sampled value will be “somewhere where values are possible”, which is \(1\).

Mathematical integration of a function can be visualized on the function’s graph as the area under the curve between the boundaries:

As we can see, on the above example, for a same interval width (\(2\)), the probability of a value between \(-4\) and \(-2\) is much lower than the probability of having a value between \(0\) and \(2\). This reflects the fact that the above probability density is higher around zero than far from it.

As was the case for discrete distributions, some classical continuous distributions exist. For instance: Continuous uniform, Gaussian (or Normal), Chi-squared, Student, Fisher\(\dots\)

They may prove helpful when trying to generalize beyond experimentally derived probability densities.

We will now briefly present a few of them.

7.2.5.1 Continuous uniform distribution

This distribution is characterized by an equal probability density all along a given interval. It is characterized by a \(min\) and a \(max\) parameters, delimiting that interval.

Here is the curve of the density function for the continuous uniform distribution on the \([-1; 1]\) interval:

The density is easy to express in terms of the \(min\) and the \(max\) parameter: \(\frac{1}{max - min}\).

Check that this is the case on the above figure, and compute the area of the rectangle delimited by the density curve and the \(x\)-axis.

7.2.5.2 Normal distribution

This is arguably the most famous family of continuous probability density functions. It is also known as “Gaussian” (from Gauss, a scientist who discovered it trying to characterize the distribution of measurement errors). It is sometimes also qualified as “Bell shaped”, for the shape of the curve of its probability density function:

This distribution is symmetrical takes two parameters, the mean (determining around what value it is centered) and the standard deviation (determining how spread it is around the mean). We will discuss those terms with more details later.

The above curve is the one for the standard normal distribution, that is, the one whose mean is \(0\) and standard deviation is \(1\).

Here is what the curves look like with mean \(0\) and standard deviations \(0.5\), \(1\) and \(2\):

If a random variable is modelled using a normal distribution, it is often useful to transform the data so that the standard normal distribution can be used.

If a random variable is modelled using a normal distribution, it is often useful to transform the data so that the standard normal distribution can be used.

This standardization can be done by simply subtracting the mean of the values, so that the values are centered around zero, and then dividing by the standard deviation of the values (more about this soon), so that the standard deviation now becomes \(1\). This can be called a scaled variable. (For French speakers, this is called “centrer et réduire”, and the result is a “variable centrée réduite”.)

This kind of transformation can be applied even if the variable does not follow a normal distribution. Just keep in mind that the result will not follow a standard normal distribution, in that case.

This kind of transformation can be applied even if the variable does not follow a normal distribution. Just keep in mind that the result will not follow a standard normal distribution, in that case.

7.2.5.3 Student’s t-distribution

This distribution was discovered by William Sealy Gosset, an employee of the Guinness brewery who published his work under the pseudonym “Student”.

Like the standard normal distribution, it is bell shaped and centered around zero. However, it is more widely spread.

It takes a parameter called “degree of freedom”, which is an integral number. The higher the degree of freedom, the lower the dispersion of the values, and the closest to the standard normal distribution.

Here is a Gaussian distribution, together with t-distributions of various degrees of freedom:

7.2.5.4 Chi-squared distribution

The Chi-squared distribution (or \(\chi^2\)-distribution) also has a parameter named “degree of freedom”. If \(df\) is the degree of freedom, the particular distribution can be noted \(\chi_{df}^2\), and is the distribution of the sum of \(df\) squared independent random variables following the standard normal distribution.

For instance, if a variable is modelled using a normal distribution, centering the values (by subtracting the mean), then normalizing (by dividing by the standard deviation), and finally squaring, would result in a random variable that is expected to follow a \(\chi_{1}^2\) distribution. This has various applications.

As a sum of squares, this probability density is only defined for positive numbers. Here are the curves for various degrees of freedom:

7.2.6 Vectors of random values

It can sometimes be useful to generate data following certain probability distributions.

R provides a family of functions whose name starts with “r”, and then something reminding the name of a theoretical distribution: rbinom for the binomial distribution, rnorm for the normal distribution, rt for Student’s t-distribution, rchisq for the Chi-squared distribution\(\dots\)

These functions generate vectors of random values drawn from the corresponding distribution.

For instance, the rnorm function generates a vector of random values from a standard normal distribution (mean 0 and standard deviation 1):

ten_norm <- rnorm(10)

ten_norm [1] -0.4764353 1.5712980 -0.5405243 -0.5895441 1.0954162 -0.6731219

[7] 0.1096119 1.0659272 0.1661113 0.2101096The argument is the number of values to draw from the distribution.

We can quickly visualize the distribution of the obtained numbers using the hist function:

hist(ten_norm)We can change the parameters of the normal distribution using the mean and sd optional arguments. mean and sd have default values of 0 and 1 respectively, so that rnorm draws from the standard normal distribution by default.

For instance, we can generate values from a normal distribution of mean \(10\) and standard deviation \(0.1\) as follows:

ten_norm_2 <- rnorm(10, mean = -4, sd = 0.1)

ten_norm_2 [1] -3.934045 -4.089639 -4.163901 -3.755335 -3.892571 -3.983323 -3.940167

[8] -4.180485 -4.114692 -3.950806The histogram should reflect the change in parameters:

hist(ten_norm_2) Use the help of the

Use the help of the rbinom function (?rbinom) to find how to generate 32 random values drawn from a binomial distribution modeling the number of tails obtain when flipping five coins. Create a flip_5_32 vector and make a barplot to represent the obtained distribution.

7.3 Location

One of the aspects by which a distribution of values can be characterized is its “central tendency”, or “location”. This is a way to estimate what a “typical” value from that distribution would be. There are several approaches to do this kind of estimation. The most commonly used location parameters are the mean and the median.

7.3.1 Arithmetic mean

The best known location descriptor is the (arithmetic) mean (or “average”) of the values. For a sample of values, it can be computed as the sum of the values, divided by the number of values.

The mean \(m\) of a sample with \(n\) values (noted \(x_1\), \(\dots\), \(x_n\)) can be expressed as follows:

\[m = \frac{x_1 + ... + x_n}{n}\] or (using the mathematical notation for a sum): \[m = \frac{\sum_{i=1}^{n}x_i}{n}\]

When using a theoretical distribution to model a population, the theoretical distribution has to be tuned using parameters. These parameters are often noted using Greek letters.

For instance, to have the theoretical distribution centered around the same values as the data, one would typically use the mean as a tuning parameter. The (unknown) mean of the population would be the ideal value for the mean parameter (noted using the Greek letter \(\mu\)). It will be estimated using the (known) sample mean (\(m\)). Such an estimated mean is sometimes noted \(\hat{\mu}\), to distinguish it from the real population mean \(\mu\). \(\hat{\mu} = m\). If the sample is not biased, \(\hat{\mu}\) for large samples will tend to be close to \(\mu\).

Estimators obtained from a sample are often noted with decorations such as \(\hat{\phantom{\mu}}\) to distinguish them from the parameters of mathematical models chosen to represent probability distributions of the population.